September 30, 2003

google buys kaltix

This past summer I was working and living down in Palo Alto, and through a housemate became acquainted with a little search engine start-up named Kaltix. I blogged about it back in July, and a reporter from c|net wrote an article about them. And today it was announced that Kaltix was acquired by Google. Now that Google has acquired the personalization know-how of both Kaltix and the failed start-up Outride, they are situated quite nicely for ushering in an era of personalized search. I'm eagerly awaiting their roll-out of these technologies. And though financial terms of the deal were not disclosed, I have a feeling that the average net worth of my friends and acquaintances took quite a jump today. Congrats...

p.s. and thanks to googler.blogs.com, whose trackback ping alerted me to the acquisition.

Posted by jheer at 05:21 PM

| Comments (1)

September 29, 2003escher + legosAll your escher favorites, rendered using everyone's favorite childhood toys. The links include the descriptions of how each piece was built, and what geometric or camera trickery was involved.

relativity | belvedere | ascending | waterfall Thanks go out to guille for the links!

Posted by jheer at 03:32 PM

| Comments (7)

leaky house?BBC NEWS | Americas | White House denies agent leak 1. Why do I get the feeling that this will fail to rise to a higher importance level than presidential sexual habits?

Posted by jheer at 12:31 PM

| Comments (0)

September 28, 2003go bearsCalifornia stuns No. 3 USC 34-31 in 3OT I was at this amazing game yesterday, watching from "Tightwad Hill" above the stadium. The sun came out just in time for the game and the Bears did not disappoint. Apparently our gang on the hill was on television, too... though we may not have been in our most telegenic mode :)

Posted by jheer at 02:07 PM

| Comments (2)

September 26, 2003metamarathonMy friend Amanda is hardcore:

Posted by jheer at 01:28 PM

| Comments (0)

tech-5I love these kinds of articles, even when they are largely re-hash. This one focuses on 3-D printing, biosimulation, autonomic computing, fuel cells, and RFID tags. My quick take: 3-D printing is bad-ass, autonomic computing is in many ways overdue, I heart fuel cells, and RFID still needs to satisfactorily address privacy issues to make it into retail. Fast Company | 5 Technologies That Will Change the World

Posted by jheer at 01:11 PM

| Comments (0)

September 25, 2003

back to my lozenge

The Chronicle declared war on my department today, calling my home on campus the worst eyesore perpetrated on the local neighborhood.

Looking at this houselike building with creamy stucco and pitched roofs and deep-set wooden windows, you sense that Cal is trying to atone for past sins -- understandably so. The Goldman School sits above Hearst Avenue, a historic dividing line between campus and the Northside neighborhood that has been blurred by a murderer's row of oversize mistakes. The worst is Soda Hall at Hearst and Le Roy avenues, looking to all the world like a four-story pile of bilious green lozenges.

Learning their lessons in building off campus

I'm not claiming the building is beautiful (many friends have claimed it looks like a very high-class bathroom), but this is overkill. So in response I'm calling for a jihad against this reporter. But go ahead and judge for yourself...

Posted by jheer at 11:37 PM

| Comments (0)

September 24, 2003spam illegalwe'll see how effective this actually turns out to be... California Bans Spam, Sets Fines

Posted by jheer at 03:47 PM

| Comments (0)

concert: radiohead

Last night I was treated to a performance by my favorite contemporary rock deities: radiohead. As expected, they did not disappoint. After braving the shoreline amphitheatre parking, we arrived in the amphitheatre about two minutes before radiohead took the stage. We then snaked our way to a nice spot above center stage, with a clear view of the band and all the displays (crucial for seeing Thom's piano-cam).

They opened with the first two tracks from Hail to the Thief, great starter songs (I'm addicted to "2 + 2 = 5" and had to restrain myself from flailing about uncontrollably). Early in the set they played "Lurgee", which surprised me since you don't often see them play songs from Pablo Honey. One highlight of the main set was a great rendition of "My Iron Lung" from The Bends. The only drawback was that the crowd just kind of stood there contentedly, rather than completely rocking out as the music warranted. Another highlight was in the midst of "No Surprises" where "tear down the government" brought an unbridled wave of cheering. GWB is obviously a popular guy with the fans. The first set wrapped up with a truly wonderful version of "There There".

Things got even better through the encores. The last time I saw radiohead, for their Amnesiac tour, their rendition of "You and Whose Army?" was a heart-wrenching emotional experience, practically bringing the whole audience to tears. This time it was a gas, with Thom making faces into the piano cam between lyrics. Didn't know that song could span such a range of experiences. This was followed by "The National Anthem" which always rocks. The most gut-wrenching song of the night for me, though, was "A Wolf At The Door". Watching, hearing, feeling Thom venomously spit out the spoken-word, pseudo-rap of the verses very convincingly communicated the anger and helplessness underlying the song.

Finally the second encore brought out old favorites "Airbag" (I called it before it came on!) and "Everything In Its Right Place". And everything was. Perhaps not "forever" as the scrolling on-stage display advertised, but at least for the whole drive back home to Berkeley.

Here is my attempt at reconstructing the set list. I will update this once I can get the middle songs back in order (I'm trying to do this all from memory).

2 + 2 = 5
[ dunno the order here ]
There There
First Encore:
Second Encore:

Posted by jheer at 01:07 PM

| Comments (2)

September 23, 2003quicksilverA review of Neal Stephenson's new book (part one of a trilogy named "the Baroque Cycle"), including numerous comparisons to Hofstadter's GEB. Stephenson has left his cyberpunk, nanotech, and cryptographic futures for things of the past, opting instead for a romp through the days of scientists of yore, including the Royal Society of Isaac Newton. The World Outside the Web - Neal Stephenson's new book upends geek chic. Stephenson's unspoken premise is that 1990s California had nothing on 1660s Europe. But jeez, Neal, 3,000 pages? Newton invented calculus in less time than it'll take to read about it.

Posted by jheer at 05:56 PM

| Comments (0)

September 22, 2003iqI ran across an ad for an online IQ test, and having never taken such a test before (which I guess is a lie, since I have taken the PSAT, SAT, GRE, ...) I decided to check it out. It was only 40 questions, mostly logic and math (including visual reasoning). I know nothing of the validity of the results... but read the extended entry if you care to know how it turned out.

This number is the result of a formula based on how many questions you answered correctly on Emode's Ultimate IQ test. Your IQ score is scientifically accurate; to read more about the science behind our IQ test, click here. During the test, you answered four different types of questions — mathematical, visual-spatial, linguistic and logical. We analyzed how you did on each of those questions which reveals how your brain uniquely works. We also compared your answers with others who have taken the test, and according to the sorts of questions you got correct, we can tell your Intellectual Type is Visual Mathematician. This means you are gifted at spotting patterns — both in pictures and in numbers. These talents combined with your overall high intelligence make you good at understanding the big picture, which is why people trust your instincts and turn to you for direction — especially in the workplace. And that's just some of what we know about you from your test results.

Posted by jheer at 03:56 PM

| Comments (0)

September 21, 2003model mayhemNew Findings Shake Up Open-Source Debate Our model "shows that closed-source projects are always slower to converge to a bug-free state than bazaar open-source projects," say theoretical physicists Damien Challet and Yann Le Du. When I have some free time (yeah right), it would be nice to go back and examine their model in further depth to see if I buy their study or not.

Posted by jheer at 08:34 PM

| Comments (0)

September 19, 2003invoking cincinnatusIn response to Clark's entry into the potentio-presidential parade, a nice little article at Slate reviews the history of "4 star" American presidents.

Posted by jheer at 07:14 PM

| Comments (0)

one hell of a petApparently buffalo-sized guinea pigs once roamed the marshes of ancient Venezuela, until North American predators arrived to wipe them out.

Posted by jheer at 06:58 PM

| Comments (0)

phishing for kids?... the courts didn't think so. Phish Bassist Cleared Of Child-Endangerment Charges

Posted by jheer at 06:44 PM

| Comments (0)

September 18, 2003smearing clarkCame across this blog entry detailing what kind of smear campaigns may be run against Wesley Clark, some of which, according to the post, have already been deployed by Rush Limbaugh.

Posted by jheer at 11:24 AM

| Comments (0)

September 17, 2003

my window

This is the view from my dining room:

Did I mention I love my new house? And soon I will be able to scientifically explain the beautiful view... Check out this excerpt from a homework problem in my computer vision class:

If one looks across a large bay in the daytime, it is often hard to distinguish the mountains on the opposite side; near sunset they are clearly visible. This phenomenon has to do with scattering of light by air--a large volume of air is actually a [light] source. Explain what is happening.

Not that you care, but I will let you know the answer once I get around to figuring it out (ostensibly before the Oct. 1 due date).

UPDATE (9/17): I think I solved the problem. It's pretty interesting. However, I realized that I can't post it before Oct. 1, because then it could be construed as providing an avenue for others to cheat. It just made me realize that though I am quite cognizant of the fact that this blog is world-readable, I tend to conceptualize it as more of a private forum. But enough with the meta-reasoning.

Posted by jheer at 07:44 PM

| Comments (0)

i passedI just found out I passed my prelims!! Fortunately my pre-notification celebrations this past weekend weren't in vain. Now I guess I actually have to do something about getting a Ph.D. instead of just talking about it.

Posted by jheer at 05:12 PM

| Comments (1)

September 16, 2003science vs. godI ran across a couple of interesting articles at wired.com in the old science meets religion department. One older article posits that science has recently become more open to religion: The New Convergence. Another article talks about the recent confluence of science and Buddhism, including fMRI studies of meditating monks: Scientists Meditate on Happiness. For those interested in such things, I'd also recommend The Tao of Physics by Fritjof Capra, which explores parallels between modern physics and Eastern religious beliefs. The edition I read was written before quarks had reached general scientific acceptance, which does have some bearing on the author's arguments... I'd be interested to see if any newer editions react to more recent scientific developments (and unproven ideas such as string theory). I think my favorite quote on the matter, however, comes from the judge on the Simpsons: As for science versus religion, I'm issuing a restraining order. Religion must stay 500 feet away from science at all times.

Posted by jheer at 08:28 PM

| Comments (0)

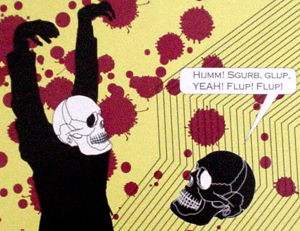

wrestling as art

I guess I should get around to posting something about this past weekend. Last Wednesday night, in my post-prelims glory (and hence the beginning of my weekend), I went to the SFMOMA to watch Mexican professional wrestling. SFMOMA and wrestling? Not two things you might normally associate with each other (though that being said, I have friends who've been to raves there more than once). Regardless, it was a blast. The wrestling was incredibly acrobatic, the wrestlers--despite (or because of?) their masks--were quite charismatic, and the ring floor was designed for maximizing the volume of all cracks, bangs, and smashes. I'd love to have a floor like that in my living room (much to the dismay of the inlaw apartment beneath us). Here's part of the placard they were handing out at the match:

Afterwards, a friend and I were wondering why the SFMOMA hosted the event. Some hypotheses were: (1) to take an important cultural phenomenon and place it in a new context, highlighting its cultural and theatric value, (2) MOMA is trying to popularize itself, or, my favorite, (3) Mexican wrestling is just damn cool. Who could turn it down? But what do I know of art...

Posted by jheer at 06:01 PM

| Comments (0)

dead leavesI also went to the White Stripes concert this weekend, where I met up with some fellow friends and bloggers. It was great. There was the weird yet ultimately endearing "tour of the 80's" opening act, whose lead singer I christened "Rotten Bowie". Then there was the White Stripes, who fucking rocked. Though I rather detest a lot of radio rock, I must admit a soft spot for the Stripes--especially the insidious "Hardest Button to Button". But the strength of the concert as a whole rested on Jack's ability to summon the spirit (if not the virtuosity) of old blues men and throw it right at you. The Johnny Cash tribute ("I Got Stripes") was well done, too. Read Conley's blog for another perspective on the night, and the spoken-word Johnny Cash lyrics.

Posted by jheer at 05:57 PM

| Comments (0)

clark says yesSalon.com News | Gen. Wesley Clark to seek White House Interesting. I just hope Democratic in-fighting doesn't create a mess that rolls over into the main election.

Posted by jheer at 09:35 AM

| Comments (0)

open searchThe main guy behind this is Doug Cutting, who also developed the Lucene search engine and worked on the Scatter/Gather clustering work at Xerox PARC.

Posted by jheer at 08:15 AM

| Comments (0)

September 12, 2003

pixies reunion!

It's happening. I'm so excited I just soiled myself. Apparently pigs do fly after all.

Pixies To Reunite For Tour In April 2004; New Album Possible

The Pixies. Are back. Together. Music is saved. Lovers of rock, unite and cheer. This is going to truly own.

Posted by jheer at 07:44 PM

| Comments (0)

$87,000,000,000How much is 87 bill-ion (raise pinky to mouth), the sum Bush is requesting for continued action in Iraq? TOMPAINE.com - What Can $87 Billion Buy? I think we owe the people of Iraq for what we have done, and so would like to see us honor the commitment we've entered into by virtue of invading, but it is always useful to have a little perspective on the costs.

Posted by jheer at 03:10 PM

| Comments (0)

dating hellA friend pointed me towards this craigslist entry. Oy vey.

Posted by jheer at 11:06 AM

| Comments (0)

rip johnny cashI awoke to tragic news this morning. Music Legend Johnny Cash Dies at 71

Posted by jheer at 09:56 AM

| Comments (2)

September 11, 2003how rich are you?... find out at the Global Rich List. Turns out that at my former job, I was pulling in enough to be the 46,777,565th richest person in the world (go ahead and infer my former salary from that...). A little playing around found that if you pull in $868 a year, you are in the middle of the pack. How do they determine the numbers? Look here for the answer.

Posted by jheer at 03:30 PM

| Comments (0)

tech newsThe recent TechNews had some interesting HCI related stories... One on haptics: Touch Technology Comes of Age Online and another on the fog screen demoed at SIGGRAPH this year: Plugged In: Making a Video Screen Out of Thin Air

Posted by jheer at 10:51 AM

| Comments (0)

nyc pollutedA story on Salon today pointed my attention to a month-old story about the toxic fallout of the WTC collapse. Most frightening is the EPA's initial report that no one was in danger, without having performed the requisite testing. Conscious companies ran their own tests and found differently, forcing the EPA to retest and change their results. This string of affairs has enraged numerous New Yorkers, and is motivating Hillary's stalling of the new EPA nomination. Apparently, all post 9/11 communications from the EPA had to be routed through the National Security Council, casting shadows on the White House's role. While there is certainly a lot of interesting politics at work here, my biggest hope is that the people of New York City get the attention, care, and prevention that they need. Here are some excerpts for my own reference: For months after the attacks, the U.S. Environmental Protection Agency insisted that the dust contained few contaminants and posed little health risk to anyone but those caught in the initial plume from the towers' collapse. "Everything we've tested for, which includes asbestos, lead, and volatile organic compounds, have been below any level of concern for the general public health," Christine Todd Whitman, then the Bush administration's EPA chief, told PBS "NewsHour" in April 2002. Even last December, assistant regional EPA administrator Kathleen Callahan reiterated that assessment before the New York City Council: "I think the results that we're getting back show that there isn't contamination everywhere." But Deutsche Bank's owners, curious to know the extent of their liability and to properly evaluate the potential danger to their own employees, privately conducted their own extensive tests. The findings: Astronomical levels of asbestos and a long list of toxic ingredients that pose a significant risk of cancer, birth defects, nerve damage and other ominous health problems. 
The cloud of pulverized debris was a virtual soup of toxic substances: Cancer-causing asbestos. PCBs -- one of the most toxic and dangerous industrial chemicals -- from a giant electrical transformer and waste oil. Mercury, which can cause nerve damage and birth defects, from the thousands of laptop computer screens that were atomized that day. Thousands of tons of pulverized concrete, which can sear the soft membrane of the lungs. Dioxin, which can damage the central nervous system and cause birth defects. The EPA's final report on air quality released in January 2003 called the Trade Center collapse the largest single release of dioxin in world history -- more than enough, on its own, to establish lower Manhattan as a federal Superfund site. Immediately after the towers collapsed, and as fires burned for days afterward, that cloud filtered its way through window seals and ventilation ducts of thousands of buildings, even those thought to be undamaged and safe. It accumulated in the corners of homes, behind bookcases and under beds. Even today, it is in the carpeting of schools and on the desktops of offices, in the ventilation systems of many buildings that have not been cleaned. Under fire from residents, businesses and local officials, the EPA finally reversed its position in May 2002 and launched an effort to clean 6,000 residences. But the program was voluntary, outreach was dismal, and it addressed dust issues in less than 20 percent of the apartments in lower Manhattan. It did nothing to ensure that the thousands of offices, stores, restaurants and other business spaces in the district were safe for human occupation. Even the EPA's in-house inspector general, in a draft of a report due out next month, slammed the agency for erroneously reassuring residents and workers that all was well in the days after the attacks. 
Citing information from a high-ranking EPA official, the draft report said agency statements in the days after the terrorist attacks were "heavily influenced" by environmental advisors in Bush's White House.

Posted by jheer at 10:41 AM

| Comments (0)

September 10, 2003

freedom

I am done with my prelims!! I think I did alright... we'll see what happens. One of the questions, though, was about information visualization, in particular focus+context visualizations. Those of you familiar with my work know that I had a real tough time with that one :). So enough school, time to hit the town and have some fun... Here's a smattering of this weekend's upcoming events. Come join me if you can!

9/10: Mexican wrestling at SFMOMA! 7:30pm
9/11: Punk rock with our Sacramentan friends, the Helper Monkeys! The show is at the Parkside in SF.
9/13: The White Stripes in concert here in Berkeley!

Posted by jheer at 04:53 PM

| Comments (1)

September 09, 2003paper: interface metaphorsInterface Metaphors and User Interface Design This paper examines the use of metaphor as a device for framing and understanding user interface designs. It reviews operational, structural, and pragmatic views on metaphor and proposes a metaphor design methodology. In short the operational approach concerns the measurable behavioral effects of applying metaphor; structural analyses attempt to define, formalize, and abstract metaphors; and the pragmatic approach views metaphors in context – including the goals motivating metaphor use and the affective effects of metaphor. The proposed design methodology consists of 4 phases: identifying candidate metaphors, elaborating source and target domain matches, identifying metaphorical mismatches, and finally designing fixes for these mismatches. Strangely, this paper makes absolutely no mention whatsoever of George Lakoff’s influential work on conceptual metaphor, which I’m almost certain had been published prior to this article. My outlined notes follow below.

Posted by jheer at 03:53 PM

| Comments (1)

September 08, 2003berkeley wificheesebikini? has started a catalog of WiFi access points in Berkeley. It's slim pickins for the time being, especially compared to San Francisco, but good to know. Hopefully us Berkeleyites will see the list blossom a bit more in the near future... cheesebikini?: Free Berkeley Wi-Fi Cafes

Posted by jheer at 07:54 PM

| Comments (0)

analyze thisAfter reading metamanda's post about personality testing, I decided I would kill a little time by taking an exam myself. I discovered that I am apparently of type ENTJ, or "Extroverted iNtuitive Thinking Judging", with measurements of Extroverted: 1%, Intuitive: 33%, Thinking 11%, and Judging: 67%. In other words, I am very balanced with respect to my "vertedness", but make lots of judgments. I'm obviously quite suspect of the accuracy of such exams. But here I go making a judging statement... you just can't win. More (or less, depending on how geeky you are) interestingly, I also noticed a weird effect with the font color on the page describing my personality type. It seems to involve text on a light background with hex color #660066 (that's 102,0,102 RGB for you base-10 types, or "darker magenta" for everyone else). Now either go to the page in question or check out the text below and start scrolling your web browser up and down: The quick brown fox jumped over the lazy dogs Do you notice the font getting brighter, more purple-y? If so, you probably are viewing on some form of LCD monitor. When I first encountered this, it weirded me out, so I tried it in a score of applications and it all worked (placing the text on a black background, however, killed the effect). I thought either I had found a cool trick of the human visual system, or a property of my display device. An unsuccessful attempt to replicate the test on a CRT monitor confirmed that the display was most likely the culprit. For reference, I'm running on an IBM Thinkpad T23, and other Thinkpad laptops have shown themselves capable of producing the effect. A web search on the topic has not revealed anything yet, though in the process I did find that Darker Cyan (006666) seems to do the trick as well. If you get the effect too, or better yet, know something relevant about LCD display technology, post a comment. 
My rough guess is that there's something about the varying response of the LCD that causes the effect to happen.
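For anyone who wants to double-check the hex-to-decimal conversions above, here is a tiny Python sketch. The helper name is my own invention, not something from a library.

```python
def hex_to_rgb(hex_color: str) -> tuple:
    """Convert a 6-digit hex color such as '#660066' to an (R, G, B) tuple."""
    digits = hex_color.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected six hex digits")
    # Each pair of hex digits encodes one 0-255 channel value.
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


darker_magenta = hex_to_rgb("#660066")  # (102, 0, 102)
darker_cyan = hex_to_rgb("006666")      # (0, 102, 102)
```

Both colors that produce the effect share the same pattern: two channels at 102 and one at 0.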

Posted by jheer at 04:03 PM

| Comments (1)

paper: hci and disabilitiesHuman Computer Interfaces for People with Disabilities Human computer interface engineers should seriously consider the problems posed by people with disabilities, as this will lead to a more widespread understanding of the true nature and scope of human computer interface engineering in general.

Posted by jheer at 12:02 PM

| Comments (0)

d'oh!Salon.com Life | Dope-seeking teens call cops by mistake I fear for the children. Moreover I fear for myself, surrounded in a world of such children.

Posted by jheer at 10:31 AM

| Comments (0)

September 06, 2003paper: contextual inquiryContextual Design: Contextual Inquiry (Chapter 3) This article discusses in depth the contextual inquiry phase of the contextual design methodology. Contextual inquiry emphasizes interacting directly with workers at their place of work within the constructs of a master/apprentice relationship model in order for designers to gain a real insight into the needs and work practices of their users.

Posted by jheer at 01:32 PM

| Comments (2)

paper: contextual designContextual Design: Introduction (Chapter 1) This book chapter introduces the difficulties of customer centered design in organizations, and proposes the methodology of Contextual Design as a set of processes for overcoming these difficulties and achieving successful designs that benefit both the customer and the business.

Posted by jheer at 01:27 PM

| Comments (0)

paper: rapid ethnography

Rapid Ethnography: Time-Deepening Strategies for HCI Field Research

HCI has come to highly regard ethnographic research as a useful and powerful methodology for understanding the needs and work practices of a user population. However, full ethnographies are also notoriously time and resource heavy, making them hard to fit into a deadline-driven development cycle. This paper presents techniques for rapid, targeted ethnographic work, in the hopes of accruing much of the benefit of field work while still fitting within acceptable time bounds. The paper organizes its suggestions around three core themes:

Posted by jheer at 01:23 PM

| Comments (0)

paper: 2D fitts' law

Extending Fitts' Law to Two-Dimensional Tasks

This paper extends the famous Fitts' Law for predicting human movement times to work accurately in two-dimensional scenarios, in particular with rectangular targets. The main finding of the paper is that two models, one which models target width by projecting along the vector of approach and another which uses the minimum of the width or height, achieved equal statistical fits, and both showed a significant benefit over models which used (width+height), (width*height), and (width-as-horizontal-distance-only).

For those who don't know, Fitts' Law is an empirically validated law that describes the time it takes for a person to perform a physical movement, parameterized by the distance to the target and the size of the target. Its formula is one-dimensional: it only considers movement along a straight line between the start and the target. The preferred formulation of the law is the Shannon formulation, so named because it mimics the underlying theorem from Information Theory --

MT = a + b log_2 (A/W + 1)

where MT is the movement time, A is the target distance or amplitude, W is the target width, and a and b are constants empirically determined by linear regression. The log term is known as the Index of Difficulty (ID) of the task at hand and is in units of bits (note the typo in the paper). The Shannon formulation is preferred for a number of reasons.
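To make the Shannon formulation concrete, here is a minimal Python sketch of the formula above. The function names and the values chosen for a and b are my own placeholders for illustration; real constants have to come from a regression on measured movement times.

```python
import math


def index_of_difficulty(amplitude: float, width: float) -> float:
    """Index of Difficulty (ID) in bits: log_2(A/W + 1)."""
    return math.log2(amplitude / width + 1)


def movement_time(amplitude: float, width: float, a: float, b: float) -> float:
    """Predicted movement time: MT = a + b * ID.

    a and b are the empirically fitted regression constants; any values
    passed here are hypothetical unless they come from real data.
    """
    return a + b * index_of_difficulty(amplitude, width)


# A target 7 units away and 1 unit wide has ID = log_2(8) = 3 bits.
bits = index_of_difficulty(7, 1)
# Hypothetical constants: a = 0.1 s, b = 0.2 s/bit.
seconds = movement_time(7, 1, a=0.1, b=0.2)
```

Note that because of the +1 inside the log, the ID never goes negative, even when the target is wider than the distance to it.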

This paper then considers two-dimensional cases. Clearly you can cast the movement along a one-dimensional line between the start and the center of the target, and the amplitude is the Euclidean distance between these points. But what to use as the width term? Historically, the horizontal width was just used, but this seems like an unintuitive choice in a number of situations, particularly when approaching the target from directly above or below. This paper studies five possibilities: using the minimum of the width and height ("smaller-of"), using the projected width along the angle of approach ("w-prime"), using the sum of the dimensions ("w+h"), using the product of the dimensions ("w*h"), and using the historical horizontal width ("status quo").

The study varied amplitude and target dimensions crossed with 3 approach angles (0, 45, and 90 degrees). Twelve subjects participated, performing 1170 trials each over four days of experiments.

The results found the following ordering among the models in terms of model fit: smaller-of > w-prime > w+h > w*h > status quo. Notably, the smaller-of and w-prime cases were quite close; their differences were not statistically significant. The w-prime case is theoretically attractive, as it cleanly retains the one-dimensionality of the model. The smaller-of model is attractive in practice as it doesn't depend on the angle of approach, and so requires one less parameter than w-prime. The w-prime model, however, doesn't require that the targets be rectangular as the smaller-of model assumes.

Finally, it should be noted that these results may be slightly inaccurate in the case of big targets, as the target point is assumed to be in the center of the target object. In many cases users may click on the edge, decreasing the amplitude.
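As a rough illustration of the five width terms, here is a small Python sketch. The function name and signature are my own, and the w-prime computation reflects my reading of "projected width along the angle of approach" for a rectangle: the length of the chord through the target center along the approach direction. Treat that geometry as an assumption rather than the paper's exact definition.

```python
import math


def effective_width(model: str, w: float, h: float, angle_deg: float = 0.0) -> float:
    """Width term to plug into the Fitts' Law ID for a w-by-h rectangular target."""
    if model == "smaller-of":
        return min(w, h)
    if model == "w-prime":
        # Assumed geometry: chord through the rectangle's center along the
        # approach direction, where 0 degrees is a horizontal approach.
        theta = math.radians(angle_deg)
        cos_t, sin_t = abs(math.cos(theta)), abs(math.sin(theta))
        along_w = w / cos_t if cos_t > 1e-12 else math.inf
        along_h = h / sin_t if sin_t > 1e-12 else math.inf
        return min(along_w, along_h)
    if model == "w+h":
        return w + h
    if model == "w*h":
        return w * h
    if model == "status quo":
        return w  # horizontal width, regardless of approach angle
    raise ValueError(f"unknown model: {model}")


# A wide, short target (4 x 1) approached from three angles.
widths = {angle: effective_width("w-prime", 4, 1, angle) for angle in (0, 45, 90)}
```

For the 4 x 1 target, w-prime gives 4 for a horizontal approach and 1 for a vertical one, while smaller-of gives 1 in every case. That gap at 0 degrees is where the two models disagree most, even though their overall fits were statistically indistinguishable.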

Posted by jheer at 01:18 PM

| Comments (0)

September 05, 2003paper: other ways to programDrawing on Napkins, Video-Game Animation, and Other Ways to Program Computers This article describes a number of visual, interactive methods to programming. The main thesis is that visual programming environments have failed to date because they are not radical enough. Programs exhibit dynamic behavior that static visuals do not always convey appropriately and so dynamic visuals, or animation, should be applied. Furthermore, visual programming can avoid explicit abstraction (i.e. when visuals become just another stand in for symbols in a formal system) without necessarily sacrificing power and expressiveness. Put more abstractly, a programming language can be designed to use one of many possible syntactical structures. It then becomes the goal of the visual programming developer to find the appropriate syntax that can be mapped to the desired language semantics. To map an existing computer language (e.g., C or LISP) into a visual form would require the use of a visual syntax isomorphic to the underlying language. Doing so in a useful, intuitive, and learnable manner proves quite difficult. Kahn describes a number of previous end-user programming systems. This includes AlgoBlocks, which allow users to chain together physical blocks representing some stage of computation. The blocks support parameterizations on them, and afford collaborative programming. Another system is Pictorial Janus, which uses visual topological properties such as containment, touching, and connection to (quite abstractly, in my view) depict program performance. He goes on to describe a (quite imaginative) virtual programming "world", ToonTalk, which can be used to construct rich, multi-threaded applications using a video-game interaction style. The ToonTalk world maps houses to threads or processes, and the robots that can inhabit houses are the equivalent of methods. Method bodies are determined by actually showing the robot what to do. 
Data such as numbers and text are represented as number pads, text pads, or pictures that can be moved about, put into boxes (arrays or tuples), or operated upon with "tools" such as mathematical functions. Communication is represented using the metaphor of birds -- hand a bird a box, and they will take it to their nest at the other house, making it available for the robots of that abode to work with the data. Kahn argues that while such an environment may be slower to use for the adept programmer, it is faster to learn, and usable even by young children. It also may be more amenable to disabled individuals. Furthermore, its interactive animated nature (you can see your program "playing out" in the ToonTalk world) aids error discovery and debugging. In conclusion, Kahn suggests that these techniques and others (e.g. speech interfaces) could be integrated into the current programming paradigm to create a richer, multimodal experience that plays off different media for constructing the appropriate aspects of software. Inspiring, yes, but quite difficult to achieve. My biggest question of the moment is: what happened to Ken Kahn? The article footer says he used to work at PARC until 92, and then focused on developing ToonTalk full-time. I'll have to look him up on the Internet to discover how much more progress he made. While I'm skeptical of these techniques being perfected and adopted in production-level software engineering in the near future, I won't be surprised if they experience a renaissance in ubiquitous computing environments, in which everyday users attempt to configure and instruct their "smart" environs. If nothing else, VCRs could learn a thing or two...

Posted by jheer at 12:01 AM

| Comments (0)

paper: prog'ing by exampleTonight I read a block of papers on end-user programming, aka Programming by Example (PBE), aka Programming by Demonstration (PBD). Very fun stuff, and definitely got me thinking about the kind of toys I would want any future children of mine to be playing with. Eager: Programming Repetitive Tasks By Example This paper introduces Cypher's Eager, a programming by example system designed for automating routine tasks in the HyperCard environment. It works by monitoring the user's actions in HyperCard and searching for repetitive tasks. When one is discovered it presents an icon, and begins highlighting what it expects the user's next action to be - an interaction technique Cypher dubs "anticipation". This allows the user to interactively - and non-abstractly - understand the model the system is building of user action. When the user is confident that Eager understands the task being performed, the user can click on the Eager icon and let it automate the rest of the iteration. For example, it can recognize the action of copying and pasting each name in a rolodex application into a new file, and completely automate the task. Eager was written in LISP, and communicated with HyperCard via interprocess communication. When a recognized pattern is to be executed, Eager constructs the corresponding HyperCard program (in the language HyperTalk) and passes it back to the HyperCard environment for execution. There are a couple of crucial things that make Eager successful. One is that Eager tries only to perform simple repetitive tasks... there are no conditionals, no advanced control structures. This simplifies both the generalization problem and the presentation of system state to the user. Second, Eager uses higher-level domain knowledge. Instead of low-level mouse data, Eager gets semantically useful data from the HyperCard environment, and furthermore has domain knowledge about HyperCard, allowing it to better match usage patterns. 
Finally, Eager has the appropriate pattern matching routines programmed in, including numbering and iteration conventions, days of the week, as well as non-strict matching requirements for lower-level events, allowing it to recognize higher-level patterns (ones with multiple avenues of accomplishment) more robustly. The downside, as I see it, however, is that for such a scheme to generalize across applications, either (a) the system must be reprogrammed for every application or (b) designers must equip each program not only with the ability to report high-level events in a standardized fashion, but also to communicate application semantics to the pattern matcher. Introducing more advanced applications with richer control structures muddies this further. That being said, such a feature could be invaluable in integrated, high-use applications such as Office or popular development environments. Integrating such a system into the help, tutorial, and mediation features already existent in such systems could be very useful indeed.
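To make the "anticipation" idea a bit more concrete, here is a toy sketch in Python. It is not Cypher's algorithm (Eager was written in LISP and used HyperCard domain knowledge); it only assumes that the application reports high-level events as (action name, number) pairs, and that a repetition shows up as the same action sequence occurring twice in a row with the numbers advancing by a constant step. The `Event` type and the `anticipate` function are hypothetical names made up for this illustration.

```python
from typing import List, Optional, Tuple

# Hypothetical event format: (high-level action name, numeric argument),
# e.g. ("copy_name", 2) for "copy the name from card 2".
Event = Tuple[str, int]


def anticipate(history: List[Event]) -> Optional[Event]:
    """Guess the user's next event, or return None if no loop is visible.

    A loop is assumed when the last `period` events repeat the action
    names of the `period` events before them, and every numeric argument
    moved by the same step between the two windows.
    """
    n = len(history)
    # Try each candidate loop-body length, shortest first.
    for period in range(1, n // 2 + 1):
        prev = history[n - 2 * period : n - period]
        last = history[n - period :]
        same_actions = all(p[0] == q[0] for p, q in zip(prev, last))
        steps = {q[1] - p[1] for p, q in zip(prev, last)}
        if same_actions and len(steps) == 1:
            step = steps.pop()
            # The next event mirrors the one a full period ago, advanced by step.
            action, arg = last[0]
            return (action, arg + step)
    return None


if __name__ == "__main__":
    rolodex = [("copy_name", 1), ("paste_name", 1),
               ("copy_name", 2), ("paste_name", 2)]
    print(anticipate(rolodex))  # ('copy_name', 3)
```

Even this toy version shows why the high-level events matter: the matcher only works because the input is already "copy the name from card 2" rather than raw mouse coordinates.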

Posted by jheer at 12:00 AM

| Comments (0)

September 04, 2003paper: charting ubicomp researchCharting Past, Present, and Future Research in Ubiquitous Computing This paper reviews ubiquitous computing research and suggests future directions. The authors present four dimensions of scale for characterizing ubicomp systems: device (inch, foot, yard), space (distribution of computation in physical space), people (critical mass acceptance), and time (availability of interaction). Historical work is presented and categorized under three interaction themes: natural interfaces (e.g. speech, handwriting, tangible UIs, vision), context-aware applications (e.g. implicit input of location, identity, activity), and automated capture and access (e.g. video, event logging, annotation). The authors then suggest a fourth, encompassing research theme of everyday computing, characterized by diffuse computational support of informal, everyday activities. This theme suggests a number of new pressing problems for research: continuously present computer interfaces, information presentation at varying levels of the periphery of human attention, bridging events between physical and virtual worlds, and modifying traditional HCI methods for informal, peripheral, and opportunistic behavior. Additional issues include how to evaluate ubicomp systems (for which the authors suggest CSCW-inspired, real-world deployment and long-term observation of use) and how to cope with the various social implications, both due to privacy and security and to behavior adaptation. In addition to the useful synopsis and categorization of past work, I thought the real contribution of this paper was the numerous suggestions for future research, many of which are quite important and inspiring. I was also very happy to see that many of the lessons of CSCW, which are particularly relevant to ubicomp, were influencing the perspective of the authors. However, on the critical side a couple things struck me. One is that many of the suggestions of research are lacking some kind of notion of how "deep" the research problem runs. 
For example, the research problems in capture and access basically summarize both the meta-data and retrieval problems, long-standing fundamental issues in the multimedia community. However, this depth and extent of the research issue, or how we might skirt the fundamental issues by domain-specificity, is not mentioned. Another issue I had was that I felt the everyday computing scenario might have used some fleshing out. I wanted the authors to provide me with the compelling scenario they say such research mandates. Examples were provided, so perhaps I am being overly critical, but I wanted a more concrete exposition, perhaps along the lines of Weiser's Sal scenario. See the extended entry for a more thorough summary

Posted by jheer at 02:50 PM

| Comments (0)

xp vs. idAn esteemed colleague passed along this interview concerning design processes and the men who invented them... Extreme Programming vs. Interaction Design Here's the blurb: Some excerpts: Cooper: When you put those two constituencies together, they come up with a solution that is better than not having those two constituencies together, I grant that. But I don't believe that it is a solution for the long term; I believe that defining the behavior of software-based products and services is incredibly difficult. It has to be done from the point of view of understanding and visualizing the behavior of complex systems, not the construction of complex systems. ...So when I talk about organizational change, I'm not talking about having a more robust communication between two constituencies who are not addressing the appropriate problem. I'm talking about incorporating a new constituency that focuses exclusively on the behavioral issues. And the behavioral issues need to be addressed before construction begins. --------------- Cooper: Building software isn't like slapping a shack together; it's more like building a 50-story office building or a giant dam. Beck: I think it's nothing like those. If you build a skyscraper 50 stories high, you can't decide at that point, oh, we need another 50 stories and go jack it all up and put in a bigger foundation. Cooper: That's precisely my point. Beck: But in the software world, that's daily business. Cooper: That's pissing money away and leaving scar tissue. Beck: No. I'm going to be the programming fairy for you, Alan. I'm going to give you a process where programming doesn't hurt like that—where, in fact, it gives you information; it can make your job better, and it doesn't hurt like that. Now, is it still true that you need to do all of your work before you start? --------------- Cooper: I'm advocating interaction design, which is much more akin to requirements planning than it is to interface design. 
I don't really care that much about buttons and tabs; it's not significant. And I'm perfectly happy to let programmers deal with a lot of that stuff. ---------------- Beck: The interaction designer becomes a bottleneck, because all the decision-making comes to this one central point. This creates a hierarchical communication structure, and my philosophy is more on the complex-system side—that software development shouldn't be composed of phases. If you look at a decade of software development and a minute of software development, you ought to have a process that looks really quite similar, and XP satisfies that. And, secondly, the appropriate social structure is not a hierarchical one, but a network structure. ------------------ Cooper: Look. During the design phase, the interaction designer works closely with the customers. During the detailed design phase, the interaction designer works closely with the programmers. There's a crossover point in the beginning of the design phase where the programmers work for the designer. Then, at a certain point the leadership changes so that now the designers work for the implementers. You could call these "phases"—I don't—but it's working together. Now, the problem is, a lot of the solutions in XP are solutions to problems that don't envision the presence of interaction design—competent interaction design—in a well-formed organization. And, admittedly, there aren't a lot of competent interaction designers out there, and there are even fewer well-formed organizations. Beck: Well, what we would call "story writing" in XP—that is, breaking a very large problem into concrete steps from the non-programming perspective still constitutes progress—that is out of scope for XP. But the balance of power between the "what-should-be-done" and the "doing" needs to maintained, and that the feedback between those should start quickly and continue at a steady and short pace, like a week or two weeks. 
Nothing you've said so far—except that you haven't done it that way—suggests to me that that's contradictory to anything that you've said. --------------------- Cooper: The problem is that interaction design accepts XP, but XP does not accept interaction design. That's what bothers me... The instant you start coding, you set a trajectory that is substantially unchangeable. If you try, you run into all sorts of problems, not the least of which is that the programmers themselves don't want to change. They don't want to have to redo all that stuff. And they're justified in not wanting to have to change. They've had their chain jerked so many times by people who don't know what they're talking about. This is one of the fundamental assumptions, I think, that underlies XP—that the requirements will be shifting—and XP is a tool for getting a grip on those shifting requirements and tracking them much more closely. Interaction design, on the other hand, is not a tool for getting a grip on shifting requirements; it is a tool for damping those shifting requirements by seeing through the inability to articulate problems and solutions on management's side, and articulating them for the developers. It's a much, much more efficient way, from a design point of view, from a business point of view, and from a development point of view. --------------- Cooper: The interaction designers would begin a field study of the businesspeople and what they're trying to accomplish, and of the staff in the organization and what problems they're trying to solve. Then I would do a lot of field work talking to users, trying to understand what their goals are and trying to get an understanding of how would you differentiate the user community. Then, we would apply our goal-directed method to this to develop a set of user personas that we use as our creation and testing tools. 
Then, we would begin our transformal process of sketching out a solution of how the product would behave and what problems it would solve. Next, we go through a period of back-and-forth, communicating what we're proposing and why, so that they can have buy-in. When they consent, we create a detailed set of blueprints for the behavior of that product. As we get more and more detailed in the description of the behavior, we're talking to the developers to make sure they understand it and can tell us their point of view. At a certain point, the detailed blueprints would be complete and they would be known by both sides. Then there would be a semiformal passing of the baton to the development team where they would begin construction. At this point, all the tenets of XP would go into play, with a couple of exceptions. First, while requirements always shift, the interaction design gives you a high-level solution that's of a much better quality than you would get by talking directly to customers. Second, the amount of shifting that goes on should be reduced by three or four orders of magnitude. Beck: The process ... seems to be avoiding a problem that we've worked very hard to eliminate. The engineering practices of extreme programming are precisely there to eliminate that imbalance, to create an engineering team that can spin as fast as the interaction team. Cooperesque interaction design, I think, would be an outstanding tool to use inside the loop of development where the engineering was done according to the extreme programming practices. I can imagine the result of that would be far more powerful than setting up phases and hierarchy.

Posted by jheer at 11:26 AM

| Comments (2)

September 03, 2003paper: why and when five users aren't enoughWhy and When Five Test Users aren’t Enough This paper argues that Nielsen’s assertion that “Five Users are Enough” to determine 85% of usability problems does not always hold up. In the end, we walk away with the admonition that five users may or may not be enough. Richer statistical models are needed, as well as good frequency and severity data. What does this mean for evaluators? Certainly this shouldn’t discourage the use of usability evaluations, but it does imply that one should avoid false confidence and keep an eye on user/evaluator variability. The paper starts by attacking the formula ProblemsFound(i) = N ( 1 – ( 1 – lambda ) ^ i ), in particular the straightforward use of the parameter lambda = .31. Generalizing the formula shows we should actually expect, for n participants, that ProblemsFound(n) = sum(j=1…N) ( 1 – ( 1 – lambda_j ) ^ n ), where lambda_j is the probability of discovering usability problem j. Nielsen and Landauer’s formula assumes this probability is equal for all such problems (computed as lambda = the average of such empirically observed probabilities). However, other studies, such as that by Spool and Schroeder, have found an average lambda of 0.081, showing that a study with ecologically valid tasks (in this case an unconstrained online shopping task with high N) can still miss many usability issues. Thus Nielsen’s claim that five is enough is only true under certain assumptions of problem discovery. But other issues also abound. For instance, Nielsen’s model doesn’t take into account the variance between users, which can strongly affect the number of users needed. Further complications arise when considering severity ratings, as the authors found huge shifts in severity ratings based on different selections of five users. 
Other problems include which tasks are used for the evaluation (changes of task revealed undiscovered usability issues) and issues with usability issue extraction, determining the true value of N.
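A quick way to see how much the choice of lambda matters is to plug numbers into both formulas. The Python sketch below does just that; the function names are mine, and the two-problem list of per-problem probabilities at the end is an invented illustration (chosen only so that its average is .31), not data from the paper.

```python
from typing import Sequence


def found_uniform(total: float, lam: float, n: int) -> float:
    """Nielsen and Landauer's model: every problem has the same discovery
    probability lam, so n users are expected to find total * (1 - (1 - lam)^n)."""
    return total * (1 - (1 - lam) ** n)


def found_per_problem(lams: Sequence[float], n: int) -> float:
    """Generalized model: problem j has its own discovery probability lams[j]."""
    return sum(1 - (1 - lam_j) ** n for lam_j in lams)


if __name__ == "__main__":
    # Fraction of problems expected from five users under each average lambda.
    print(round(found_uniform(1, 0.31, 5), 3))   # 0.844
    print(round(found_uniform(1, 0.081, 5), 3))  # 0.344

    # Invented example: one easy and one hard problem, averaging lambda = .31.
    uneven = [0.61, 0.01]
    print(found_per_problem(uneven, 5) < found_uniform(len(uneven), 0.31, 5))  # True
```

With lambda = .31 five users get you to roughly 84%, but with Spool and Schroeder's .081 they get you to only about a third. And even when the average lambda is held at .31, spreading it unevenly across problems lowers the expected yield, which is the gist of the complaint about averaging.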

Posted by jheer at 07:16 PM

| Comments (4)

paper: heuristic evaluationHeuristic Evaluation This paper describes the famous (in HCI circles) technique of Heuristic Evaluation, a discount usability method for evaluating user interface designs. HEs are conducted by having an evaluator walk through the interface, identifying and labeling usability problems with respect to a list of heuristics (listed below). It is usually recommended that multiple passes be made through the interface, so that evaluators can get a larger, contextual view of the interface, and then focus on the nitty-gritty details. Revised Set of Usability Heuristics

The evaluators also go through a round of assigning severity ratings to all discovered usability problems, allowing designers to prioritize fixes. The severity is a mixture of frequency, impact, and persistence of an identified problem, and as presented forms a scale from 0 to 4, where 0 = Not a usability problem, 1 = Cosmetic problem only, 2 = Minor problem, 3 = Major problem, 4 = Usability catastrophe. Nielsen performs an analysis to show that inter-evaluator ratings have better-than-random agreement, and so ratings can be aggregated to get reliable estimates of severity. Heuristic evaluation is cheap and can be done by user interface experts (i.e., it can be performed without bringing in outside users). The best results come from evaluators who are familiar with both usability testing and the application domain of the evaluated interfaces. HE is faster and less costly than typical user studies, with which it can be used in conjunction (i.e. use HE first to filter out problems, then run a real user study to find remaining deeper-seated issues). Lacking real user input, however, HE can run the risk of missing, or misestimating, usability infractions. Nielsen found over multiple studies that the typical evaluator found only 31 percent (lambda = .31) of known usability problems in an interface. Using the model that ProblemsFound(i) = N ( 1 – ( 1 – lambda ) ^ i ), where i is the number of evaluators and N is the total number of problems, we can arrive at the conclusion that 5 evaluators are enough to find 84% of usability problems. Nielsen also performs a cost-benefit analysis that finds 4 as the optimal number. Read the summary of the Woolrych and Cockton paper for a dissenting opinion.
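For the curious, the "5 evaluators gets you 84%" arithmetic can be turned around to ask how many evaluators a given coverage target requires. The Python sketch below solves the model for i; the function names are my own, and it deliberately leaves out Nielsen's cost-benefit analysis, since that depends on cost figures not given here.

```python
import math


def proportion_found(lam: float, evaluators: int) -> float:
    """Expected fraction of problems found: 1 - (1 - lam)^i."""
    return 1 - (1 - lam) ** evaluators


def evaluators_needed(lam: float, target: float) -> int:
    """Smallest i with 1 - (1 - lam)^i >= target, from solving the model for i:
    i >= log(1 - target) / log(1 - lam)."""
    if not (0 < lam < 1 and 0 < target < 1):
        raise ValueError("lam and target must both lie strictly between 0 and 1")
    return math.ceil(math.log(1 - target) / math.log(1 - lam))


if __name__ == "__main__":
    print(round(proportion_found(0.31, 5), 3))  # 0.844
    print(evaluators_needed(0.31, 0.84))        # 5
    print(evaluators_needed(0.31, 0.50))        # 2
```

Note how flat the curve gets: under this model two evaluators already cover half the problems, while each additional one past five buys only a few more percentage points.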

Posted by jheer at 06:49 PM

| Comments (0)

paper: your place or mine?Finishing off my block of CSCW papers is Dourish, Bellotti, et al.'s article on the long-term use and design of media spaces. Your Place or Mine? Learning from Long-Term Use of Audio-Video Communication This article reviews over 3 years of experience using an open audio-video link between the authors' offices to explore media spaces and remote interaction. The paper details the evolution of new behaviors in response to the communication medium, both at the individual and social levels. For example, the users learned to stare at the camera to initiate eye contact, but later learned to establish attention without doing so. Also, colleagues would come to an office to speak to the remote participant.

I saw some important take home lessons here:

My full outlined summary follows...

Posted by jheer at 06:03 PM

| Comments (0)

post-modern presidentJust read this interesting article: The Post-Modern President: Deception, Denial, and Relativism: what the Bush administration learned from the French. Read the extended entry for some excerpts from the article. The article also links to an amusing report measuring the "Mendacity Index", or how much and what severity of falsehood emanated from the last four presidents. Their rankings (in order of the biggest to smallest liar) are: G. W. Bush, Reagan, Bush Sr., and then Clinton. Perhaps Clinton went for the quantity over quality approach.... Excerpts from the post-modern president: If you're a revisionist - someone pushing for radically changing the status quo - you're apt to see "the experts" not just as people who may be standing in your way, but whose minds have been corrupted by a wrongheaded ideology whose arguments can therefore be ignored. To many in the Bush administration, 'the experts' look like so many liberals wedded to a philosophy of big government, the welfare state, over-regulation and a pussyfooting role for the nation abroad. ...In that simple, totalizing assumption we find the kernel of almost every problem the administration has faced over recent months--and a foretaste of the troubles the nation may confront in coming years. By disregarding the advice of experts, by shunting aside the cadres of career professionals with on-the-ground experience in these various countries, the administration's hawks cut themselves off from the practical know-how which would have given them some chance of implementing their plans successfully. In a real sense, they cut themselves off from reality. ...Everyone is compromised by bias, agendas, and ideology. But at the heart of the revisionist mindset is the belief that there is really nothing more than that. Ideology isn't just the prism through which we see world, or a pervasive tilt in the way a person understands a given set of facts. Ideology is really all there is. 
For an administration that has been awfully hard on the French, that mindset is...well, rather French. They are like deconstructionists and post-modernists who say that everything is political or that everything is ideology. That mindset makes it easy to ignore the facts or brush them aside because "the facts" aren't really facts, at least not as most of us understand them. If they come from people who don't agree with you, they're just the other side's argument dressed up in a mantle of facticity. And if that's all the facts are, it's really not so difficult to go out and find a new set of them. The fruitful and dynamic tension between political goals and disinterested expert analysis becomes impossible.

Posted by jheer at 03:11 PM

| Comments (0)

September 02, 2003synesthesia and the binding problemWhile I was an undergrad at Cal, I worked for a semester in the Robertson Visual Attention Lab with graduate student Noam Sagiv, researching synesthesia - a phenomenon in which the stimulation of one sensory modality reliably causes a perception in one or more different senses. Examples include letters and numbers having colors, sounds eliciting images (no psychedelics necessary!), and touch causing tastes. Some of our research shed some light on what is known as the binding problem - the mismatch between our unified conscious experience of the world and the fairly well established fact that sensory processing occurs in distinct, specialized regions of the brain (e.g., a color area, a shape area, etc). I recently stumbled across this review article in which Lynn Robertson, head of the lab I had worked for, reviews these issues including some of the findings of our work. If you're into cognitive science, it's worth checking out! You can scope the summary or the full article.

Posted by jheer at 10:41 PM

| Comments (0)

paper: computers, networks, and workComputers, Networks, and Work This article describes the early adoption of networked communication (e.g. e-mail) into the workplace. The often surprising social implications of networking began with the ARPANET, precursor of the modern internet. E-mail was originally considered a minor additional feature, but rapidly became the most popular feature of the network. We see immediately an important observation regarding social technologies: they are incredibly hard to predict. In organizations that provided open access to e-mail (i.e. without managerial restrictions in place), some thought that electronic discussion would improve the decision making process, as conversations would be “purely intellectual… less affected by people’s social skills and personal idiosyncracies.” The actual results were more complicated. Text-only conversation has fewer context cues (including appearance and manner) and weakened inhibitions. This has led to more difficult decision making, due to a more democratic style in which strong personalities and hierarchical relationships are eroded. While it gives a larger voice to typically quieter individuals, electronic conversation, with its lowered social inhibitions, is also prone to more extreme opinions and anger venting (e.g. “flaming”). One study even shows that people who consider themselves unattractive report higher confidence and liveliness over networked communication. Given these observations, the authors posit a hypothesis: when cues about social context are weak or absent, people ignore their social situation and cease to worry about how others evaluate them. In one study, people self-reported many more illegal or undesirable behaviors over e-mail than when given the same study on pen and paper. In the same vein, traditional surveys of drinking account for only half of known sales, yet an online survey's results matched the sales data more accurately than face-to-face reports. 
The impersonality of this electronic medium ironically seems to engender more personal responses. Networked communication has also been known to affect the structure of the workplace. A study found that a networked work group, compared to a non-networked group, created more subcommittees and had multiple committee roles for group members. These networked committees were also designed in a more complex, overlapping structure. Networked communication also presents new opportunities for the life of information. Questions or problems can be addressed by other experienced employees, often from geographically disparate locations, allowing faster response over greater distance. Furthermore, by creating a protocol for saving and categorizing such exchanges, networked media can remember this information, increasing the life of the information and making it available to others. As the authors illustrate, networked communication showed much promise early on. However, it doesn’t always come as expected or for free. The authors note the issue of incentive… shared communication must be beneficial to all those who would be using it for adoption to be successful. Also it may be the case that managers will end up managing people they have never met… hinting at the common ground problem described by the Olsons [Olson and Olson, HCI Journal, 2000]. Coming back to the authors’ hypothesis also raises one exciting fundamental question. As networked communication becomes richer, social context will begin to re-appear, modifying the social impact of the technologies. As this richer design space emerges, how can we utilize it to achieve desired social phenomena in a realm that is so prone to unpredictability?

Posted by jheer at 10:22 PM

| Comments (0)

paper: distance mattersDistance Matters This paper examines and refutes the myth that remote cooperative technology will remove distance as a major factor affecting collaboration. While technologies such as videoconferencing and networking allow us to more effectively communicate and collaborate across great distances, the authors argue that distance will remain an important factor for the foreseeable future, regardless of how sophisticated the technology becomes. This paper reviews results of studies concerning both collocated and distant collaborative work, and extracts four concepts through which to understand collaborative processes and the adoption of remote technologies: common ground, coupling, collaboration readiness, and technology readiness. The case is then made that because of these issues and their interactions, distance will continue to have a strong effect on collaborative work processes.

Posted by jheer at 10:20 PM

| Comments (0)

paper: groupware and social dynamicsKicking off a batch of papers on Computer-Supported Cooperative Work (CSCW) is Grudin's list of challenges to the CSCW developer... Groupware and Social Dynamics: Eight Challenges for Developers Groupware is introduced as software which lies in the midst of the spectrum between single-user applications and large organizational information systems. Examples include e-mail, instant messaging, group calendaring and scheduling, and electronic meeting rooms. The developers of groupware today come predominantly from a single-user background, and hence many do not realize the social and political factors crucial to developing groupware. Grudin outlines 8 major issues confronting groupware development and gives some proposed solutions: (1) The disparity between who does the work and who gets the benefit; (2) Critical Mass and Prisoner’s Dilemma Problems; (3) Social, Political, and Motivational Factors; (4) Exception Handling in Workgroups; (5) Designing for Infrequently Used Features; (6) The Underestimated Difficulty of Evaluating Groupware; (7) The Breakdown of Intuitive Decision-Making; (8) Managing Acceptance: A New Challenge for Product Developers. Take home messages from the paper: groupware should strive to directly benefit all group members, build off of existing successful apps if possible, develop thoughtful adoption strategies, and be rooted in an understanding of the [physical|social|political] environment of use.

Posted by jheer at 09:49 PM

| Comments (0)

whither heerforce?My blog has been rather silent as of late... I got a little pre-occupied with moving back to Berkeley, starting up school, and wrestling with SBC to get DSL up and running out here... now I'm swamped in prelims studying and upcoming conference deadlines, but much to the joy of the 5 or so people who actually read this, I will try to post when I can. Though I warn you, with prelims coming up next week, there may only be a large number of paper summaries in the works...

Posted by jheer at 09:37 PM

| Comments (2)

|

| jheer@acm.ørg |